In this three-part series, we explore the security considerations when running coding agents in CI/CD environments, examine implementation strategies for GitHub Copilot and Claude Code, and demonstrate how runtime monitoring enhances the security posture of AI-powered development workflows.

GitHub Actions Powering AI: An Expanding Ecosystem

The integration of AI into CI/CD workflows is rapidly evolving beyond individual coding assistants. GitHub Next's Continuous AI project represents a broader vision where AI agents become integral to the entire software development lifecycle. This ecosystem, documented in the awesome-continuous-ai repository, showcases dozens of tools and frameworks that enable AI to operate autonomously within CI/CD pipelines - from code generation and testing to deployment and monitoring.

This proliferation of AI-powered CI/CD tools underscores the urgency of establishing proper security frameworks.

Why GitHub Actions Is the Natural Platform for Coding Agents

GitHub Actions has emerged as the ideal platform for running AI coding agents, thanks to its deep integration with the GitHub ecosystem. This tight coupling enables coding agents to seamlessly access repository content, read issues, analyze pull requests, and propose changes - all within a unified platform. The compute infrastructure provided by GitHub Actions offers clean, ephemeral environments perfect for AI workloads, while the marketplace ecosystem accelerates deployment through pre-built actions.

GitHub's API-first design particularly benefits coding agents. Agents such as GitHub Copilot and Claude Code can leverage native GitHub APIs to understand project context, access issue discussions, and create pull requests with proper formatting and metadata. This integration creates a powerful development experience where AI agents feel like natural extensions of the GitHub workflow rather than external tools.

However, this same deep integration requires careful security consideration. Coding agents operating in GitHub Actions inherit significant privileges through GITHUB_TOKEN and other secrets, making proper security controls essential for protecting your software supply chain.
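One common control is to scope the `GITHUB_TOKEN` explicitly with the workflow `permissions` key. Below is a minimal, hypothetical audit helper. It assumes the workflow YAML has already been parsed into a Python dict (for example with a YAML library, not shown). `audit_token_permissions`, `effective_permissions`, and the sample workflow are illustrative names, not part of any GitHub tool. The sketch relies on GitHub's documented behavior that a job-level `permissions` block replaces the workflow-level one, and that omitting both falls back to the repository's default token permissions.

```python
from typing import Any


def effective_permissions(workflow: dict[str, Any], job_name: str) -> Any:
    """A job-level `permissions` block replaces the workflow-level one.
    Returns None when neither is set, meaning the repository default applies."""
    job = workflow["jobs"][job_name]
    if "permissions" in job:
        return job["permissions"]
    return workflow.get("permissions")


def audit_token_permissions(workflow: dict[str, Any]) -> list[str]:
    """List every job whose GITHUB_TOKEN can write, or whose scope is not pinned."""
    findings = []
    for job_name in workflow.get("jobs", {}):
        perms = effective_permissions(workflow, job_name)
        if perms is None:
            findings.append(f"{job_name}: no permissions block, repository default applies")
        elif perms == "write-all":
            findings.append(f"{job_name}: write-all grants write access to every scope")
        elif isinstance(perms, dict):
            for scope, level in sorted(perms.items()):
                if level == "write":
                    findings.append(f"{job_name}: {scope}: write")
    return findings


# Hypothetical agent workflow, already parsed from YAML into a dict.
agent_workflow = {
    "on": {"issues": {"types": ["assigned"]}},
    "permissions": {"contents": "read"},
    "jobs": {
        "agent": {
            "runs-on": "ubuntu-latest",
            "permissions": {"contents": "write", "pull-requests": "write", "issues": "write"},
        },
        "lint": {"runs-on": "ubuntu-latest"},
    },
}

for finding in audit_token_permissions(agent_workflow):
    print(finding)
```

In this example only the `agent` job holds write scopes. Those are the scopes an agent needs to push branches, open pull requests, and comment on issues, so the goal of such an audit is to keep them confined to the one job that actually runs the agent.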

Beyond Traditional Security: Why Your EDR Can't Protect CI/CD

Traditional EDR solutions face fundamental limitations in CI/CD environments, particularly when securing AI coding agents. These tools primarily rely on detecting known bad behavior - an approach that fails against novel CI/CD attack patterns. The recent tj-actions incident perfectly illustrates this gap: attackers used gist.githubusercontent.com, a well-known GitHub-owned endpoint, to download malicious code. Traditional EDR solutions couldn't flag this as suspicious because the domain itself is legitimate.
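To make the gap concrete, here is a toy comparison between a reputation-style check and a job-specific egress policy. Both domain lists are hypothetical. This is not how any particular EDR or CI/CD security tool is implemented. It only shows why a GitHub-owned endpoint passes the first test but fails the second.

```python
# Hypothetical reputation list: domains a reputation-based tool treats as benign.
REPUTABLE_DOMAINS = ("github.com", "githubusercontent.com")


def passes_reputation(domain: str) -> bool:
    """True if the domain, or a parent domain, has a good reputation."""
    return any(domain == d or domain.endswith("." + d) for d in REPUTABLE_DOMAINS)


# Hypothetical allowlist of the exact endpoints one specific job is expected to reach.
JOB_EXPECTED_ENDPOINTS = {"api.github.com", "github.com"}


def passes_job_policy(domain: str) -> bool:
    """True only if this job is expected to contact this exact endpoint."""
    return domain in JOB_EXPECTED_ENDPOINTS


domain = "gist.githubusercontent.com"
print(passes_reputation(domain))  # True: the domain is GitHub-owned
print(passes_job_policy(domain))  # False: this job was never expected to call it
```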

The challenge goes deeper than domain reputation. EDR solutions lack awareness of CI/CD context: they can't distinguish between GitHub Copilot legitimately downloading dependencies for code analysis and a compromised agent downloading malicious packages. They miss the critical relationship between workflow triggers, coding agent actions, and the resulting system behaviors that define security boundaries in AI-powered pipelines.

This context gap becomes critical when securing coding agents. When GitHub Copilot or Claude Code executes commands based on issue descriptions or pull request comments, traditional security tools see only the low-level system calls—not the AI decision chain that led to those actions. This blindness to CI/CD semantics makes it impossible to detect sophisticated attacks targeting coding agent logic.

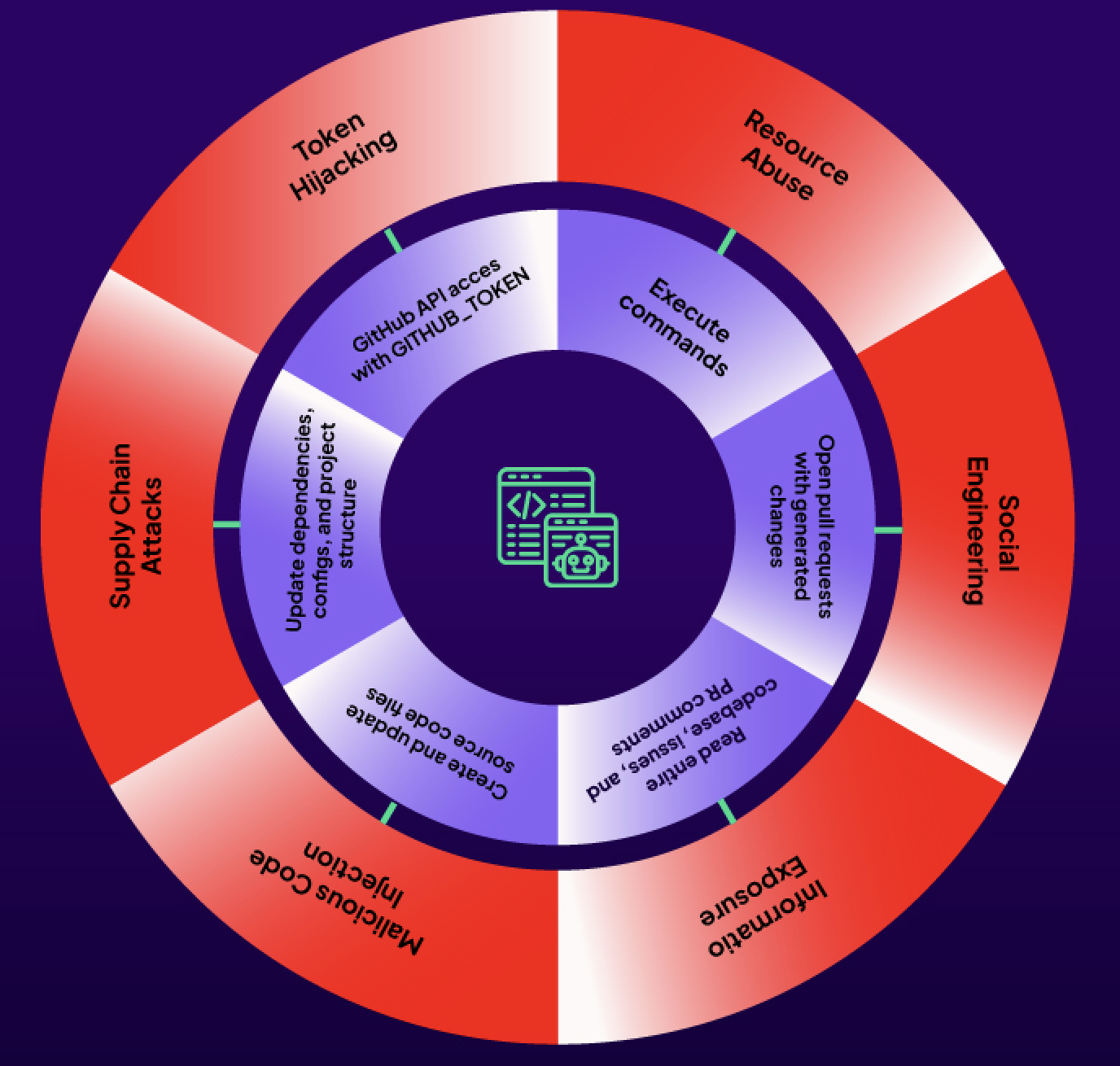

The Anatomy of Coding Agent-Specific Security Risks

While both GitHub Copilot and Claude Code operate with specific security constraints, they possess elevated GitHub API privileges that expand the attack surface beyond read-only operations. These agents can:

- Create feature branches programmatically

- Open and update pull requests with code changes

- Push commits to a draft pull request as the work progresses

- Update issues with comments and labels

- Access repository content and metadata through the GitHub API

The real security risk lies in behavioral manipulation - tricking agents into generating or executing malicious code within the CI/CD pipeline itself. While agents cannot directly modify protected branches, they can open pull requests containing malicious code that gets merged into the main codebase once a human reviewer, who may not spot subtle vulnerabilities, approves it. Attackers can also trick coding agents into producing code changes or issue and pull request interactions, such as comments, that trigger GitHub Actions workflow runs executing malicious code, leading to CI/CD supply chain attacks.

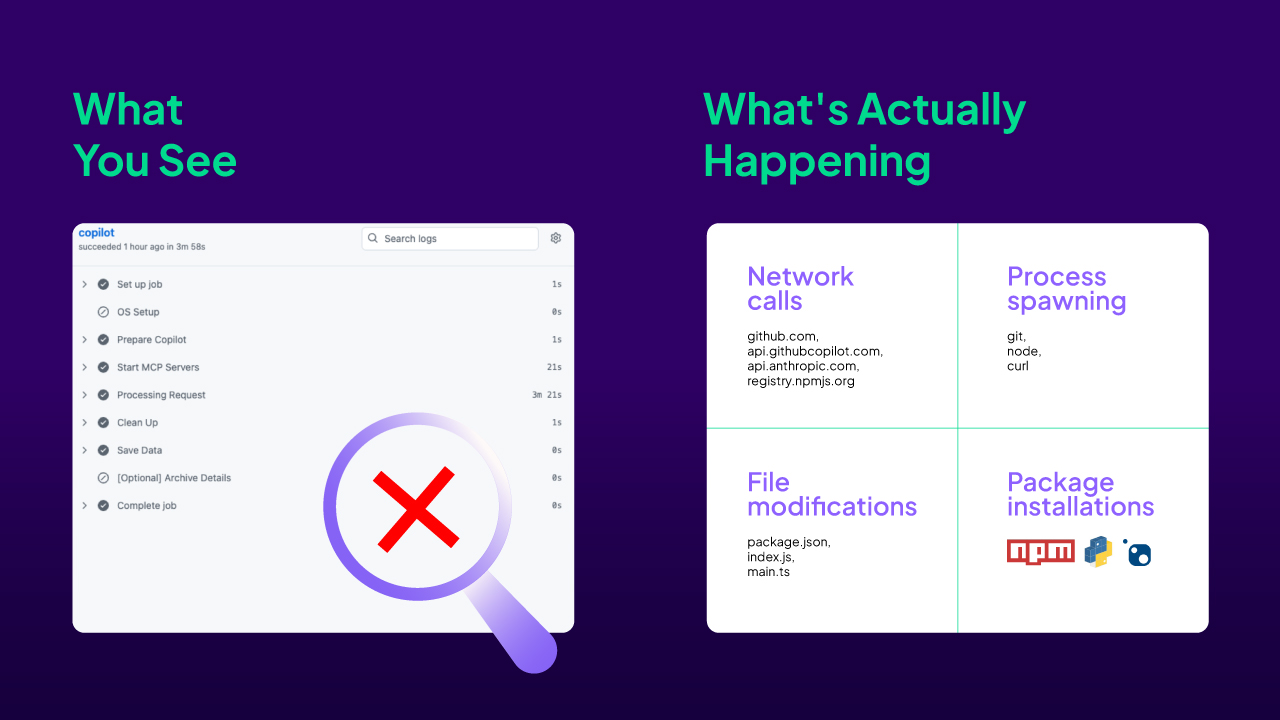

The Visibility Gap: What Happens Behind the Scenes?

When coding agents operate in CI/CD environments, they generate and execute code autonomously based on natural language instructions. However, enterprises face a critical visibility gap - they cannot see what these agents are doing in real-time. Unlike human developers who commit code through pull requests with clear diffs, coding agents can generate, modify, and execute code within the CI/CD pipeline without providing transparency into their decision-making process.

Traditional CI/CD logs only show high-level job outcomes, not the granular activities happening within each step. When an agent says "I'll optimize your build process," organizations have no insight into what optimizations are actually being implemented or what system resources are being accessed.

Runtime Monitoring: Bridging the Visibility Gap

Securing coding agents requires more than just access controls - it demands real-time visibility into their operations. This is where solutions like Harden-Runner provide essential transparency. Unlike traditional security tools, Harden-Runner is designed specifically for CI/CD environments and provides:

- Real-Time Visibility: Every action taken by agent-generated code is monitored and logged, providing complete transparency into what's happening behind the scenes.

- Behavioral Tracking: See exactly what files are accessed, what processes are spawned, and what network connections are attempted by agent-generated code.

- Context-Aware Monitoring: Every action is correlated with the specific workflow, job, and step, showing exactly which coding agent action triggered which system behavior.

- Instant Alerts: When agent-generated code exhibits suspicious behavior, security teams are notified immediately with full context.
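The context-aware idea can be sketched in a few lines: tag every observed network event with its workflow, job, and step, compare it against what that same step has contacted before, and raise an alert that carries the context. This is a hypothetical illustration under simple assumptions, namely an in-memory baseline keyed by `(workflow, job, step)` and exact-match destinations. `NetworkEvent`, `detect_anomalies`, and the sample data are invented for this example and do not describe Harden-Runner's internals or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NetworkEvent:
    """One outbound connection, tagged with the CI/CD context it came from."""
    workflow: str
    job: str
    step: str
    process: str
    destination: str


# Hypothetical baseline: destinations each (workflow, job, step) reached in earlier runs.
Baseline = dict[tuple[str, str, str], set[str]]


def detect_anomalies(events: list[NetworkEvent], baseline: Baseline) -> list[str]:
    """Return one alert string per event whose destination is new for its step."""
    alerts = []
    for ev in events:
        key = (ev.workflow, ev.job, ev.step)
        if ev.destination not in baseline.get(key, set()):
            alerts.append(
                f"[{ev.workflow} / {ev.job} / {ev.step}] "
                f"{ev.process} -> {ev.destination} (not in baseline)"
            )
    return alerts


baseline: Baseline = {
    ("agent.yml", "agent", "Install dependencies"): {"registry.npmjs.org"},
    ("agent.yml", "agent", "Run coding agent"): {"api.github.com"},
}

events = [
    NetworkEvent("agent.yml", "agent", "Install dependencies", "npm", "registry.npmjs.org"),
    NetworkEvent("agent.yml", "agent", "Run coding agent", "curl", "gist.githubusercontent.com"),
]

# Prints one alert, for the curl call to gist.githubusercontent.com.
for alert in detect_anomalies(events, baseline):
    print(alert)
```

Keying the baseline by step rather than by repository means a destination that is normal for a dependency-install step can still be flagged when the step running the coding agent contacts it.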

This visibility transforms coding agents from black boxes into transparent automation tools.

Building Secure AI-Powered Development Pipelines

The integration of coding agents into CI/CD represents a fundamental shift in software development. These tools offer tremendous productivity benefits, but they also introduce novel security considerations that require purpose-built solutions. Organizations adopting GitHub Copilot, Claude Code, or other AI agents must implement appropriate runtime protections to ensure their automation doesn't become an attack vector.

In the following articles, we will demonstrate real-world workflows where Harden-Runner is integrated with GitHub Copilot and Claude Code pipelines - giving teams visibility, control, and peace of mind.

The future of software development is AI-powered, and security must evolve to match. By implementing proper runtime monitoring and understanding coding agent-specific risks, organizations can harness AI's power while maintaining robust security postures.
